AI and Neuroscience - Part 2: Research in AI can absorb knowledge from neuroscience

In this second part of "AI and Neuroscience", we discuss some aspects of the human brain on which deep learning (DL) is already based and, more importantly, some that have not yet been adapted to DL. This post is based mostly on the paper [1].

Brain circuits have been a major source of inspiration for the development of DL. In particular, the artificial neuron in DL is modeled on the neurons of our brain, and model weights (parameters) are a simplified analogue of synapses. The main goal of most current neural network models is to tune these weights on training samples so that they produce the desired outputs. A trained model is considered good if it generalizes well, meaning that once training is complete, it still performs well on new samples it has never seen.
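As a minimal sketch of the ideas above (our own illustration, not code from [1]), the Python snippet below trains a single artificial neuron, a weighted sum passed through a sigmoid, on a hypothetical toy task, then measures generalization on fresh samples that were never used during training. The task, the learning rate `lr` and the number of `epochs` are assumptions chosen only for illustration.

```python
import math
import random


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def neuron(weights, bias, x):
    """A single artificial neuron: weighted sum of the inputs, then a sigmoid."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return sigmoid(z)


def make_samples(n, rng):
    """Hypothetical toy task: the label is 1 when x0 + x1 > 1, else 0."""
    samples = []
    for _ in range(n):
        x = [rng.random(), rng.random()]
        y = 1 if x[0] + x[1] > 1 else 0
        samples.append((x, y))
    return samples


def train(samples, epochs=200, lr=0.5):
    """Tune the weights (the 'synapses') by stochastic gradient descent on the log loss."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in samples:
            error = neuron(weights, bias, x) - y  # d(log loss)/dz for a sigmoid unit
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias


def accuracy(weights, bias, samples):
    """Fraction of samples whose thresholded output matches the label."""
    correct = sum((neuron(weights, bias, x) > 0.5) == (y == 1) for x, y in samples)
    return correct / len(samples)


rng = random.Random(0)
train_set = make_samples(200, rng)
test_set = make_samples(100, rng)  # new samples, never seen during training
w, b = train(train_set)
print("train accuracy:", accuracy(w, b, train_set))
print("test accuracy:", accuracy(w, b, test_set))
```

Comparing training and test accuracy is the simplest check of generalization: a model that merely memorized its training samples would score well on the first but poorly on the second.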

According to [1], when compared with the primate visual system, deep networks may capture the later, cognitive stages (later layers) less well than the earlier processing stages (earlier layers). Similarly to DL, the reward mechanism of reinforcement learning (RL) is also inspired by computations in the brain. DL and RL can even be combined to improve results in some fields, for example, to beat human champions at games.
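A standard way to formalize RL's reward mechanism is temporal-difference (TD) learning, whose reward prediction error `delta = r + gamma * V(s') - V(s)` has been widely compared to the firing of dopamine neurons. The sketch below is our own illustration rather than code from [1]; the five-state chain, the learning rate `alpha` and the discount factor `gamma` are hypothetical choices.

```python
def td0_chain(n_states=5, episodes=500, alpha=0.1, gamma=0.9):
    """TD(0) value learning on a hypothetical chain of states 0..n_states-1.

    The agent always moves one state to the right. Leaving the last state
    yields a reward of 1 and ends the episode; every other step yields 0.
    """
    values = [0.0] * n_states
    for _ in range(episodes):
        for s in range(n_states):
            terminal = s == n_states - 1
            reward = 1.0 if terminal else 0.0
            next_value = 0.0 if terminal else values[s + 1]
            # Reward prediction error: how much better or worse things went than expected.
            delta = reward + gamma * next_value - values[s]
            values[s] += alpha * delta
    return values


values = td0_chain()
print([round(v, 3) for v in values])
```

After training, the value of state `s` approaches `gamma ** (n_states - 1 - s)`, so states closer to the reward are valued more highly, and the prediction error shrinks toward zero once the reward is fully anticipated.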

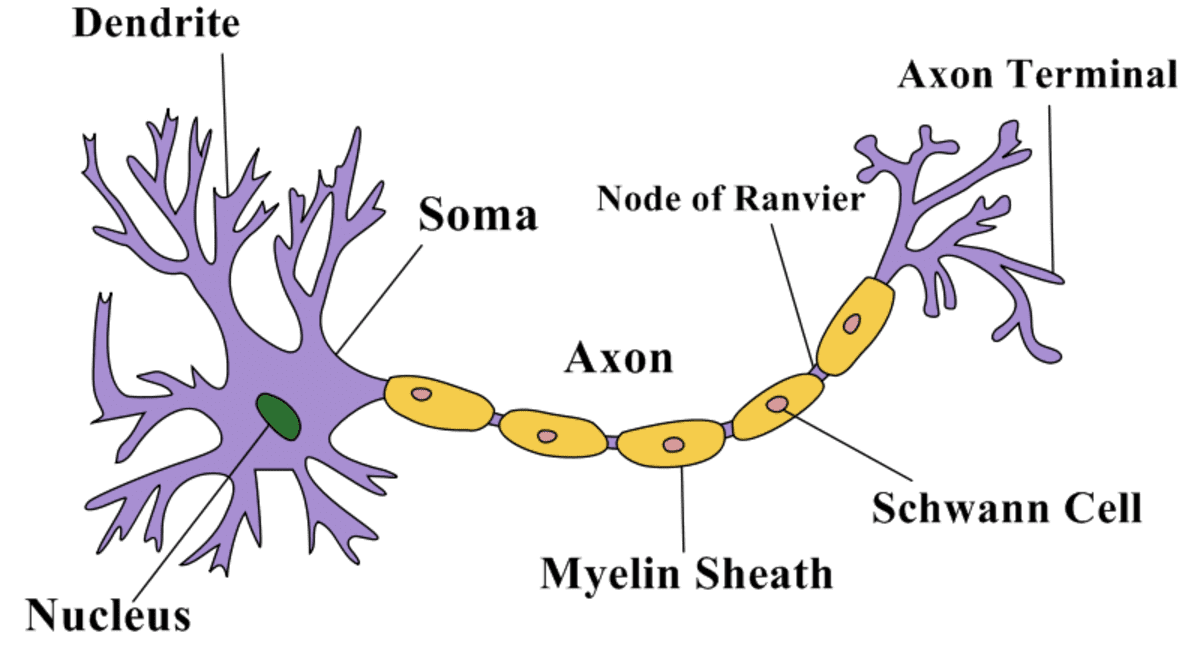

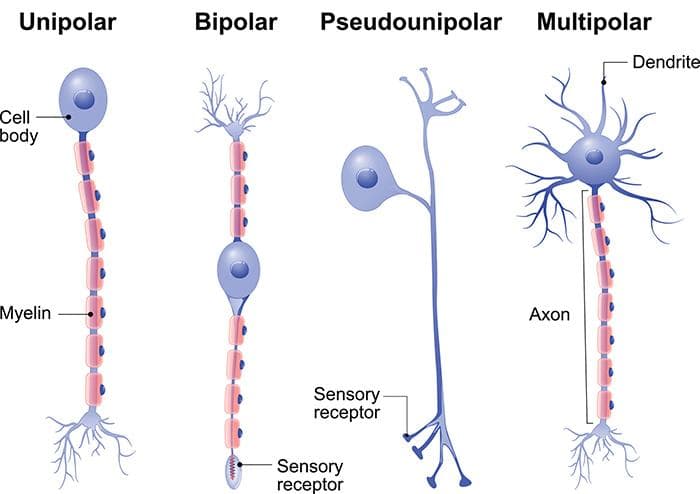

Some brain procedures that have already been taken advantage of by DL are the normalization across neuronal groups, the spatial attention, ... However, as a matter of course, there are some points that still have not been brought to DL yet. Especially about neurons, their current styles in DL are not as varied as in the brain and neither are their structures and interconnectivity. You should look at the two figures below for more information about the structure of a neuron (Figure 1) and the types of neurons (Figure 2) in the human brain.

Figure 1: The structure of a neuron (Image from [2]).

Figure 2: Types of neurons (Image from [3]).
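The paper's exact normalization scheme is not spelled out here, so as an assumption we sketch one well-known model from neuroscience, divisive normalization, in which each unit's response is divided by the pooled activity of its group, next to layer normalization, a related operation widely used in DL. The parameter names `sigma` and `exponent` and the default values are illustrative choices, not taken from [1].

```python
import math


def divisive_normalization(drives, sigma=1.0, exponent=2.0):
    """Divide each unit's rectified, exponentiated drive by the pooled activity of the group."""
    powered = [max(d, 0.0) ** exponent for d in drives]
    pool = sigma ** exponent + sum(powered)  # sigma keeps the denominator away from zero
    return [p / pool for p in powered]


def layer_norm(x, eps=1e-5):
    """DL counterpart: center and rescale the activations of one layer."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


print(divisive_normalization([1.0, 2.0, 3.0]))
print(layer_norm([1.0, 2.0, 3.0]))
```

In both cases a unit's output depends not only on its own input but also on the activity of the other units in its group, which is the key idea behind normalization across neuronal groups.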

From the two figures above, we can see that the "true" neuron is highly complex and diverse in both structure and type, while the current DL neuron is far from it. In DL, all neurons are homogeneous and have a very simple structure.

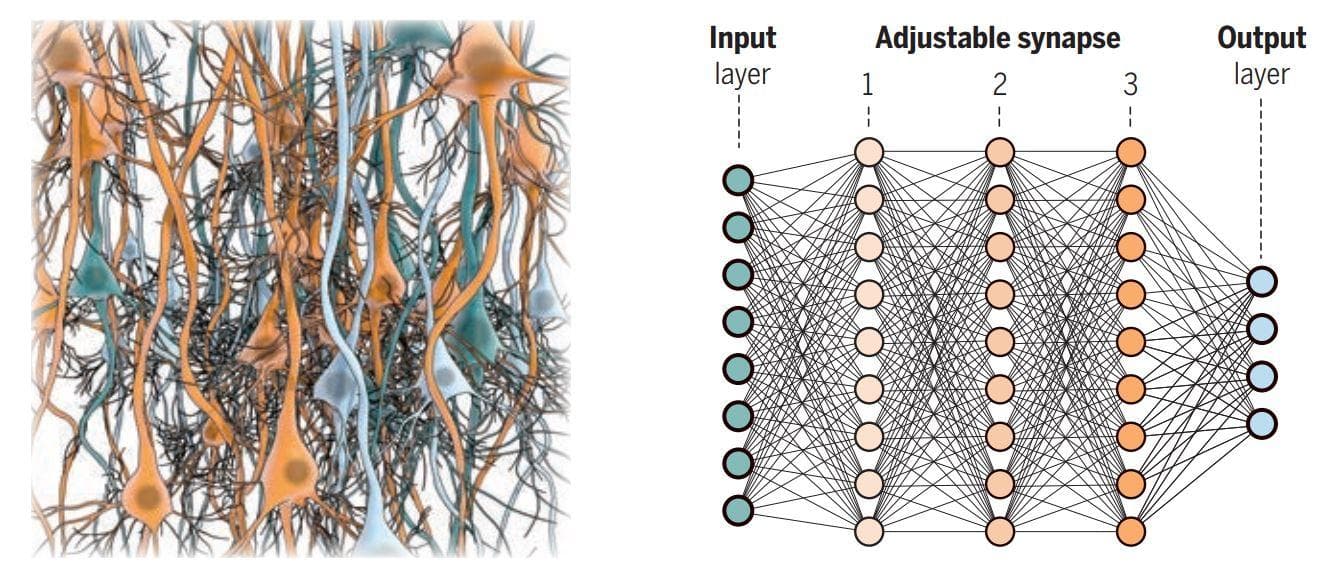

Regarding the connectivity between these neurons, the author has a very notable recognition: "cortical circuits in the brain are more complex than current deep network models and include rich lateral connectivity between neurons in the same layer, by both local and long-range connections, as well as top-down connections going from high to low levels of the hierarchy of cortical regions". To have more details, you should look at the figure below.

Figure 3: Complex human neural network vs AI neural network (Image from [1]).
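To make the contrast in the quote concrete, the sketch below compares a purely feedforward pass with a hypothetical circuit in which the first layer also receives lateral input from units in the same layer and top-down feedback from the layer above, iterated over a few time steps. The layer sizes, all weights (`w_ff1`, `w_ff2`, `w_lat`, `w_td`) and the number of `steps` are illustrative assumptions, not a model from [1].

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def relu(values):
    return [max(v, 0.0) for v in values]


def feedforward(x, w_ff1, w_ff2):
    """Standard deep-network pass: information flows only bottom-up."""
    h1 = relu(matvec(w_ff1, x))
    h2 = relu(matvec(w_ff2, h1))
    return h1, h2


def recurrent(x, w_ff1, w_ff2, w_lat, w_td, steps=10):
    """Hypothetical cortex-like circuit: layer 1 combines bottom-up input,
    lateral input from its own units and top-down feedback from layer 2."""
    h1 = [0.0] * len(w_ff1)
    h2 = [0.0] * len(w_ff2)
    for _ in range(steps):
        bottom_up = matvec(w_ff1, x)
        lateral = matvec(w_lat, h1)   # same-layer connections
        top_down = matvec(w_td, h2)   # feedback from the higher layer
        h1 = relu([b + l + t for b, l, t in zip(bottom_up, lateral, top_down)])
        h2 = relu(matvec(w_ff2, h1))
    return h1, h2


x = [1.0, 0.5]
w_ff1 = [[0.5, -0.2], [0.3, 0.8], [-0.4, 0.6]]   # input (2) -> layer 1 (3)
w_ff2 = [[0.7, 0.1, 0.2], [-0.3, 0.5, 0.4]]      # layer 1 (3) -> layer 2 (2)
w_lat = [[0.0, -0.1, -0.1], [-0.1, 0.0, -0.1], [-0.1, -0.1, 0.0]]  # weak mutual inhibition
w_td = [[0.2, 0.0], [0.0, 0.2], [0.1, 0.1]]      # layer 2 (2) -> layer 1 (3)

print(feedforward(x, w_ff1, w_ff2))
print(recurrent(x, w_ff1, w_ff2, w_lat, w_td))
```

With the lateral and top-down weights set to zero, the recurrent circuit reduces exactly to the feedforward network, which shows that a standard deep network is a special case of this richer connectivity.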

This raises two major open questions. The first is whether current deep neural networks, with their simplified structures, are sufficient to achieve human-level cognition. If they are not, the second is to what extent current models have come close to "real" human performance.

As mentioned in Part 1, innate structure may also be one of the most important factors in achieving general AI. In other words, the question concerns the roles of nativism and empiricism and the balance between them. Empiricism here means that the performance of a model depends on how it learns from experience. Nativism, by contrast, refers to the innate structures and mechanisms of the brain that are present from the start, without any learning. Current AI models lean toward empiricism: they learn to extract useful patterns from their inputs, usually from a very large number of samples.

This is very different from how humans acquire cognition. For example, babies develop perception within just their first few months of life. In addition, we often need to see only one or two examples of something before we can correctly recognize new instances of the same category. These observations strongly suggest the potential of unsupervised or self-supervised learning.

Given all this, it is worth emphasizing once more the role of neuroscience as a reliable source for improving deep learning. Further research is needed to determine which aspects of the human brain could help build human-like machine intelligence.

References

[1] Shimon Ullman, Using neuroscience to develop artificial intelligence, Science, 2019.

[2] Structure of a neuron, Owlcation.

[3] Types of neurons, Queensland Brain Institute, The University of Queensland.