AI and Neuroscience - Part 3: How can deep learning help neuroscience

In part 2 of this series, we saw that the neuroscience of the human brain is the inspiration behind deep learning. In this post, we take the opposite direction and look at how deep learning can support research in neuroscience. As a side benefit, you will also pick up the basics of deep learning in a concise way.

This post is mainly based on the Nature Neuroscience article "A deep learning framework for neuroscience" [1].

Neuroscience is a challenging field, and it has not yet uncovered all the mysteries of the brain. Its research often aims to explain the activity underlying neural responses and circuits. Such research is carried out within previously defined frameworks, and artificial intelligence, especially deep learning, is considered one of them.

3 core components of an artificial neural network

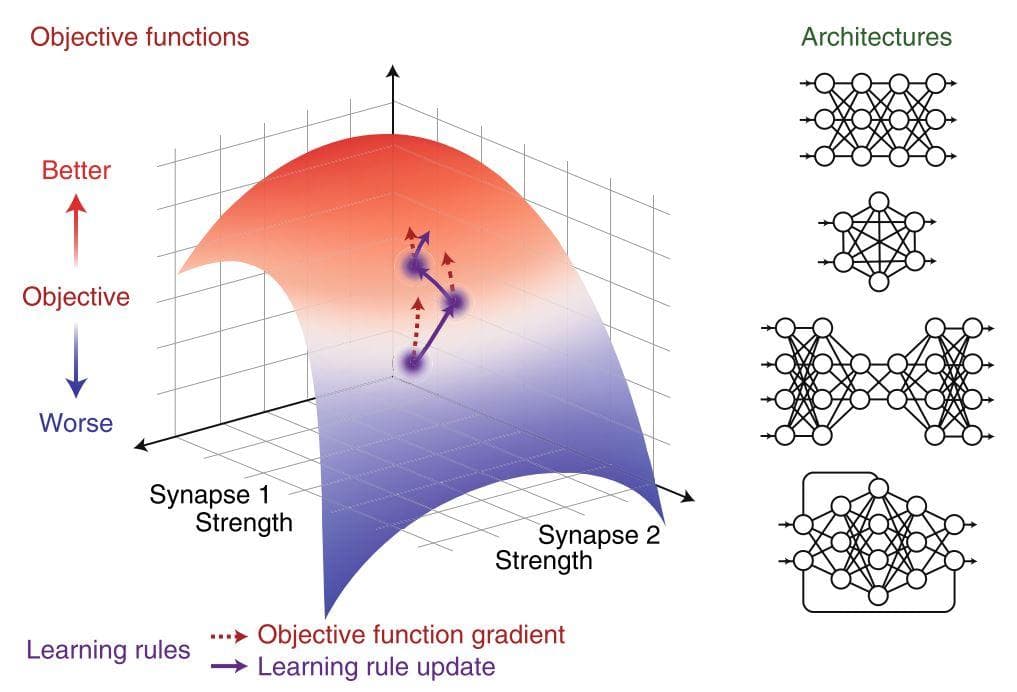

According to this article, the design of an artificial neural network (ANN) has 3 core components:

- Objective functions (or cost functions): express the learning goals and are either maximized or minimized.

- Learning rules: the paradigms for updating synaptic weights, i.e. how a model learns.

- Network architectures: the number of layers, the number of neurons in each layer, how layers are arranged, how layers are connected, ...

Figure 1: 3 core components of ANN design (Image from [1]).

Deep learning researchers typically do not need to specify computations by hand, as in "handcrafted" methods; they just need to focus on these 3 elements. However, it is still up to humans to define these three components.
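As a rough illustration of how the three components fit together, here is a minimal sketch in plain Python. Everything in it is an assumption made for the example and not taken from [1]: the architecture is a single linear neuron with two inputs, the objective function is the mean squared error, the learning rule is plain gradient descent, and the names `forward`, `objective`, `learning_rule` and `true_weights` are hypothetical.

```python
import random

# Architecture (assumed for illustration): one linear neuron with two inputs.
def forward(weights, x):
    return sum(w * xi for w, xi in zip(weights, x))

# Objective function: mean squared error over the dataset, to be minimized.
def objective(weights, data):
    return sum((forward(weights, x) - y) ** 2 for x, y in data) / len(data)

# Learning rule: one step of gradient descent on the objective.
def learning_rule(weights, data, learning_rate=0.1):
    gradient = [0.0] * len(weights)
    for x, y in data:
        error = forward(weights, x) - y
        for i, xi in enumerate(x):
            gradient[i] += 2.0 * error * xi / len(data)
    return [w - learning_rate * g for w, g in zip(weights, gradient)]

random.seed(0)
true_weights = [1.5, -0.5]  # hypothetical weights the neuron should recover
data = []
for _ in range(100):
    x = [random.gauss(0.0, 1.0), random.gauss(0.0, 1.0)]
    data.append((x, forward(true_weights, x)))

weights = [0.0, 0.0]
initial_cost = objective(weights, data)
for _ in range(200):
    weights = learning_rule(weights, data)
final_cost = objective(weights, data)

print("cost before:", initial_cost, "cost after:", final_cost)
print("learned weights:", weights)
```

Only the three functions had to be written by hand; the values of the weights themselves come out of the learning process.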

Neurons in an ANN correspond to neurons in the brain, and layers in an ANN are roughly analogous to brain regions. The lowest layer learns low-level features such as points and edges; the following layers learn features of gradually increasing complexity, until the last layer captures, for example, the shape of the object. All layers contribute to the success of the whole network.

Evidence for the role of AI/DL in neuroscience research

Deep learning can serve as a foundation for developing theories in neuroscience. In fact, it has been shown that a deep neural network (DNN) can simulate, at least to some extent, the representational transformations in primate perception as well as behavioral and neurophysiological phenomena ("grid cells, shape tuning, temporal receptive fields, ..." [1]).

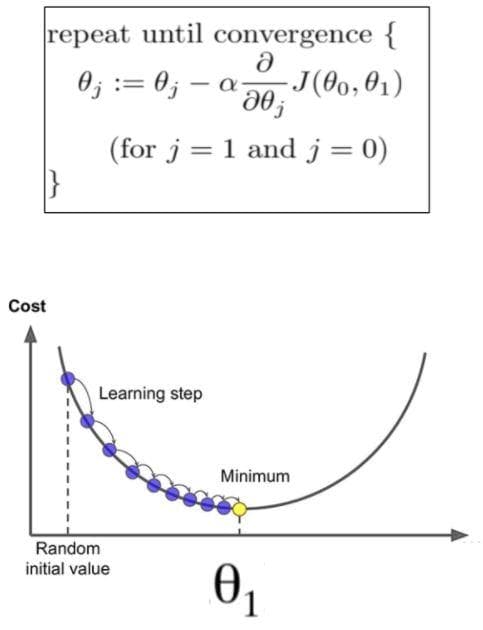

The objective function, one of the three components mentioned above, measures how close an ANN is to its optimal goal. Learning happens by updating the weights/parameters of the network according to the gradient of the objective function: the value of each weight is modified based on the gradient of the objective function at its current value. This paradigm can be thought of as a "praise/criticize" mechanism: whatever value a weight has, it is updated in the direction that improves the value of the objective function. You probably know this from basic machine learning courses. The important question here is whether the brain also operates with such a gradient-based method.

Figure 2: Gradient descent (Image from [2]). The y-axis is the cost, i.e. the value of the objective function. The x-axis is the value of a weight/parameter.

For example, in the figure above, if the weight value is on the left side of the graph (e.g. at the random initial value), the gradient of the objective function is negative. According to the update equation, we subtract the gradient (scaled by a learning rate) from the current value of the weight. Because the gradient is negative, the update effectively "adds" to the weight. Similarly, if the weight value is on the right side of the graph, the gradient is positive and the update "subtracts" from the weight. That is why we called gradient descent a "praise"/"criticize" mechanism above ("add" is "praise", "minus" is "criticize").
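The sign argument can be checked with a small sketch. The cost function here is a hypothetical one-dimensional parabola with its minimum at `w = 3`, chosen only to resemble the bowl-shaped curve in Figure 2; the learning rate of 0.1 and the two starting points are assumptions as well.

```python
# Hypothetical convex cost with its minimum at w = 3.
def cost(w):
    return (w - 3.0) ** 2

def gradient(w):
    return 2.0 * (w - 3.0)

def gradient_descent_step(w, learning_rate=0.1):
    # Update rule: subtract the scaled gradient from the current weight.
    return w - learning_rate * gradient(w)

left_start, right_start = 0.0, 6.0

# Left of the minimum the gradient is negative, so the weight increases.
left_next = gradient_descent_step(left_start)
# Right of the minimum the gradient is positive, so the weight decreases.
right_next = gradient_descent_step(right_start)

# Repeating the step moves the weight toward the minimum of the cost.
w = left_start
for _ in range(100):
    w = gradient_descent_step(w)

print(left_start, "->", left_next)
print(right_start, "->", right_next)
print("after 100 steps:", w, "cost:", cost(w))
```

In both cases the same rule is applied; only the sign of the gradient decides whether the weight goes up or down.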

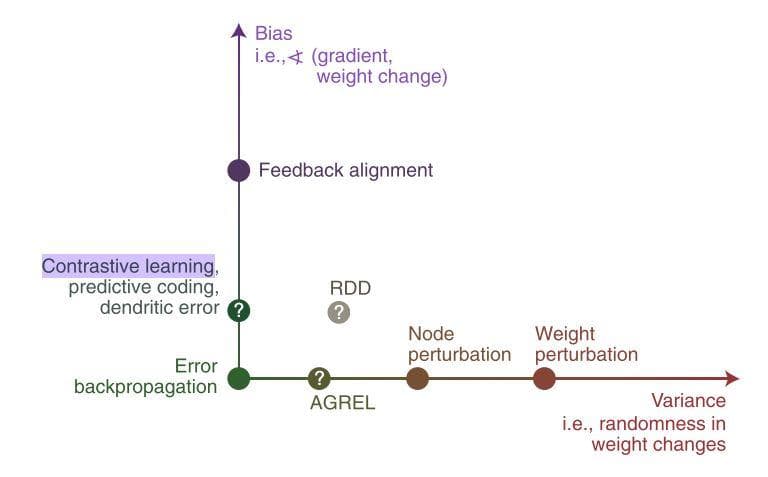

One way to calculate gradients is backpropagation, but it does not "obey" the assumptions of biological plausibility. In contrast, there are other ways to estimate gradients that do "obey" these biological assumptions; some algorithms in this category are weight/node perturbation, random feedback, ... All of these approaches to gradient-based weight updates are called learning rules. Recall that the learning rule is one of the three core components that researchers consider when designing an ANN. But as you may know, estimation always comes with the problem of bias and variance. Many solutions have been proposed to reduce the bias and variance of these algorithms while still preserving their biological plausibility.

Figure 3: Bias and variance in learning rules (Image from [1]). The question marks here show that there is uncertainty in these positions. RDD: regression discontinuity design. AGREL: attention-gated reinforcement learning.

Note that this is just a sketch: it does not show exact values of bias and variance, only relative positions.
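To make the variance problem more concrete, here is a minimal sketch of one common form of weight perturbation. It is not the formulation used in [1]; the quadratic objective, the noise scale `sigma`, the sample count and all function names are assumptions chosen for the example. The idea is to add random noise to the weights, observe how the objective changes, and correlate that change with the noise to obtain a gradient estimate without backpropagation.

```python
import random

def objective(weights):
    # Hypothetical quadratic objective with its minimum at (1, -2).
    return (weights[0] - 1.0) ** 2 + (weights[1] + 2.0) ** 2

def true_gradient(weights):
    # Exact gradient, used here only as a reference for comparison.
    return [2.0 * (weights[0] - 1.0), 2.0 * (weights[1] + 2.0)]

def perturbation_estimate(weights, sigma, rng):
    # Add Gaussian noise to every weight and observe how the objective changes.
    noise = [rng.gauss(0.0, sigma) for _ in weights]
    perturbed = [w + n for w, n in zip(weights, noise)]
    change = objective(perturbed) - objective(weights)
    # Correlating the change with the noise gives a noisy gradient estimate.
    return [change * n / sigma ** 2 for n in noise]

def averaged_estimate(weights, sigma, rng, num_samples):
    total = [0.0] * len(weights)
    for _ in range(num_samples):
        estimate = perturbation_estimate(weights, sigma, rng)
        total = [t + e for t, e in zip(total, estimate)]
    return [t / num_samples for t in total]

rng = random.Random(0)
weights = [0.0, 0.0]
exact = true_gradient(weights)
single = perturbation_estimate(weights, 0.1, rng)
averaged = averaged_estimate(weights, 0.1, rng, 20000)

print("exact gradient:   ", exact)
print("single estimate:  ", single)
print("averaged estimate:", averaged)
```

A single estimate can be far from the exact gradient, while the average over many perturbations comes close to it. For this particular objective the estimator is accurate on average but has high variance, which is the kind of trade-off that Figure 3 sketches.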

From the points above, we can be reasonably persuaded that a deep learning framework for neuroscience research is feasible. Although ANNs do not yet simulate every part of the brain, ANN-based models do share a number of behaviors with the brain.

Moreover, our understanding of ANNs will not stand still. There may be delays, but it is expected to keep growing thanks to research sub-domains such as explainable artificial intelligence (XAI). Exploring ANNs is therefore meaningful for experimentation on neuroscience systems.

AI set, Brain set, and Inductive biases

No single network, model, architecture, or algorithm is powerful enough to be adapted to all types of problems.

Before coming to what inductive biases are, let's first define two terms:

- AI Set: the set of perception tasks (detection, classification, ...) that animals perform easily and that are considered simple enough for the initial stage of AI research.

- Brain Set: the set of animal behavior tasks that neuroscientists choose for studying the brain. It partially overlaps with the AI Set.

The term "inductive biases" in the context of designing an ANN means that researchers have built their knowledge into the details of the network architecture. Thus, each problem/task in DL is formulated with a neural network architecture specially designed for that task using prior human knowledge. This is called inductive bias because there is a bias in choosing the architecture, a bias that comes from incorporating human knowledge. Learning therefore becomes easier, which has contributed to the recent successes of deep learning. It also suggests that DL flourishes not only because of increasing computation, but also because the design of inductive biases is a significant factor.
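One way to see how architecture can encode prior knowledge is to count parameters. The sketch below is an illustration of my own and not an example from [1]: it compares a fully connected layer with a convolutional layer, a well-known architecture whose built-in assumption is that the same local feature detector is useful at every image position. The image size, kernel size, channel count and function names are all hypothetical.

```python
def dense_parameter_count(input_size, output_size):
    # Fully connected layer: every output unit has its own weight
    # for every input, plus one bias per output unit.
    return input_size * output_size + output_size

def conv_parameter_count(kernel_height, kernel_width, in_channels, out_channels):
    # Convolutional layer: one small kernel per output channel,
    # shared across all image positions, plus one bias per channel.
    return kernel_height * kernel_width * in_channels * out_channels + out_channels

# Hypothetical setting: a 28x28 grayscale image mapped to
# 8 feature maps of the same spatial size.
image_pixels = 28 * 28
dense_params = dense_parameter_count(image_pixels, 8 * image_pixels)
conv_params = conv_parameter_count(3, 3, 1, 8)

print("fully connected layer:", dense_params, "parameters")
print("convolutional layer:  ", conv_params, "parameters")
```

With far fewer free parameters to fit, the layer that carries the prior knowledge has much less to learn from data, which is one intuition for why good inductive biases can make learning easier.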

Figure 4: Animal behaviors (Image from [3]).

Something similar might happen in the animal brain, with habitat as an additional influential factor. This is one more point in favor of using a deep learning framework for neuroscience. Inductive biases should therefore be used for both the AI Set and the Brain Set. With inductive biases, some misconceptions can even be dispelled. For example, to counter the idea that "deep networks require a lot of data to learn, which is different from the brain", one can give two reasons according to [1]:

- "many species, especially humans, develop slowly with large quantities of experiential data"

- "deep networks can work well in low-data regimes if they have good inductive biases"

By now, you have probably recognized the significant contribution that AI may bring to neuroscience. If not, that is okay too: if we knew for sure what would happen, it would not be research.

References

[1] Blake A. Richards et al., A deep learning framework for neuroscience, Nature Neuroscience, 2019.

[2] Clare Liu, 5 Concepts you should know about gradient descent and cost function, KDnuggets, 2020.

[3] [10.3: Evolution of Animal Behavior](https://bio.libretexts.org/Bookshelves/Introductory_and_General_Biology/Book%3A_Introductory_Biology_(CK-12)/10%3A_Animals/10.03%3A_Evolution_of_Animal_Behavior), Biology LibreTexts, 2021.